How Mozilla Hubs Uses WebRTC

a web resource by zach fox

Your feedback will help improve this page! You can submit questions and comments about this page via Discord DM (@ZachAtMozilla) or the "🔒private-dev" channel on the Hubs Discord server.

This page is under active development. Language, layout, and contents are subject to change at any time.

This page's source is currently available here on GitHub.

Introduction

This page exists to demystify WebRTC and the way Hubs uses WebRTC technologies so that more people can understand and make better use of Hubs' communication capabilities. Additionally, with a deeper understanding of the Hubs technology stack, more community members will be able to suggest improvements to and fix bugs regarding the WebRTC components of Hubs.

By reading this document, you will:

- Learn the definition of WebRTC

- Understand how WebRTC-based applications transmit and receive voice and video data

- Learn which software libraries are used to power Hubs' WebRTC stack

- Learn how those software libraries are used in Hubs (including code snippets and explanations)

- Learn the answers to frequently asked questions

- Be exposed to snippets of Hubs code that perform specific complex functions

If you have additional questions about Hubs or Hubs' use of WebRTC, Mozilla developers are available to answer your questions on the Hubs Discord server.

What is WebRTC?

WebRTC (Web Real-Time Communication) is an open-source project that allows people to communicate using audio and video via their web browser. Developers can also implement WebRTC technology into applications that are not web browsers, such as Discord.

The WebRTC project defines a set of protocols and APIs. Some of those protocols and APIs are implemented by browser developers. It is then the responsibility of Web application developers to properly make use of those protocols and APIs.

🦆 Hubs uses WebRTC to let people in the same Hub communicate with each other using voice chat and video.

For more technical information about WebRTC, see this WebRTC API MDN document.

WebRTC Communication Data Flow

When two people video chat using a WebRTC-based application, Person A's voice and video data must somehow be transmitted over the Internet and received by Person B.

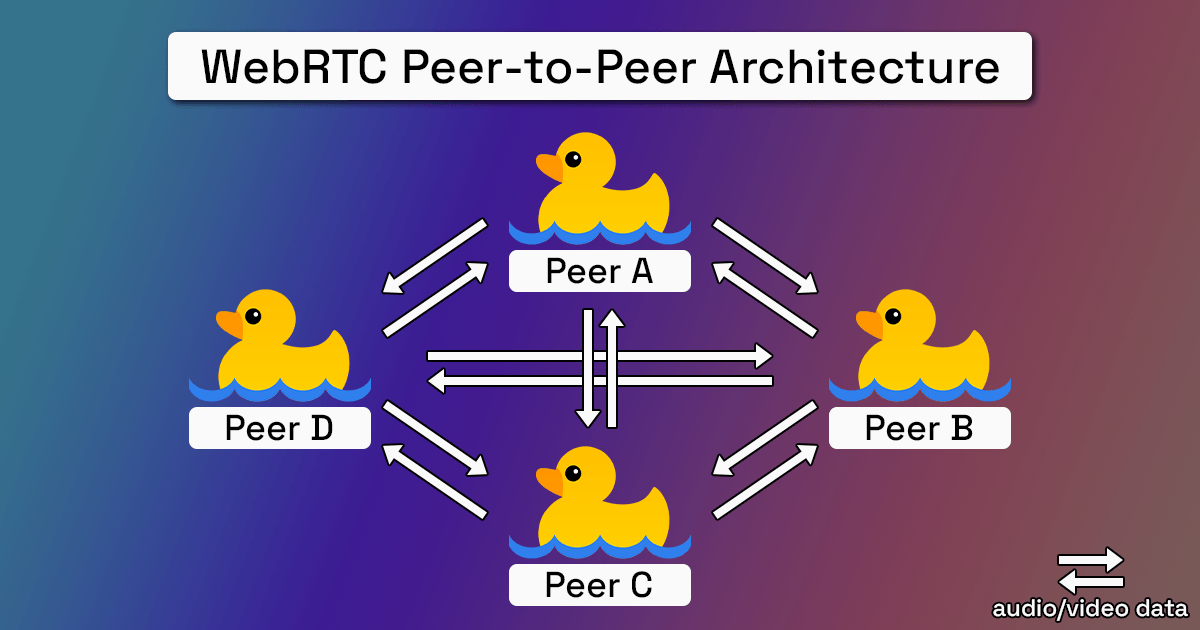

The simplest architecture for sending and receiving this data is for Person A's client to send that data directly to Person B's client. This architecture is called "Peer to Peer" communication.

Peer-to-Peer (P2P) Communication

Hubs does not use P2P communication for voice and video data. However, understanding this architecture is fundamental to understanding more complex communication architectures.

With P2P communication, each peer sends their audio/video data to all other connected peers:

P2P Pros

- Reduced complexity and cost: P2P communication doesn't require the use of any intermediate servers for audio/video data transmission

- Privacy by default: Peers are connected directly via secure protocols

P2P Cons

- Upload bandwidth too high with multiple participants: Each peer must upload audio/video data to all other peers.

- CPU usage too high with multiple participants: Each peer must encode their audio/video for each remote peer, and that encoding process is resource-intensive.

The cons noted above make the P2P architecture a non-starter for most applications, since most folks' upload speeds and CPUs are not fast enough to support more than a couple of peers.
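
To make the scaling problem concrete, here is a minimal sketch of the arithmetic behind the cons above. The function names and the 1.5 Mbps per-stream bitrate are illustrative assumptions, not measurements from Hubs.

```javascript
// In a full-mesh P2P call, every peer uploads (and encodes) one stream
// for each of the other peers.
function p2pUploadStreamsPerPeer(peerCount) {
  return Math.max(peerCount - 1, 0);
}

// Total upload bandwidth one peer needs, given an assumed per-stream bitrate.
function p2pUploadMbpsPerPeer(peerCount, mbpsPerStream) {
  return p2pUploadStreamsPerPeer(peerCount) * mbpsPerStream;
}

const ASSUMED_MBPS_PER_STREAM = 1.5; // hypothetical video bitrate

for (const peerCount of [2, 4, 10]) {
  console.log(
    `${peerCount} peers: ${p2pUploadStreamsPerPeer(peerCount)} uploads per peer, ` +
      `${p2pUploadMbpsPerPeer(peerCount, ASSUMED_MBPS_PER_STREAM)} Mbps up`
  );
}
```

The per-peer cost grows linearly with the number of participants, which is why a call that works for two people can fail for ten.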

Since the P2P architecture won't work for a multi-user application like Hubs, we must select a different communication architecture.

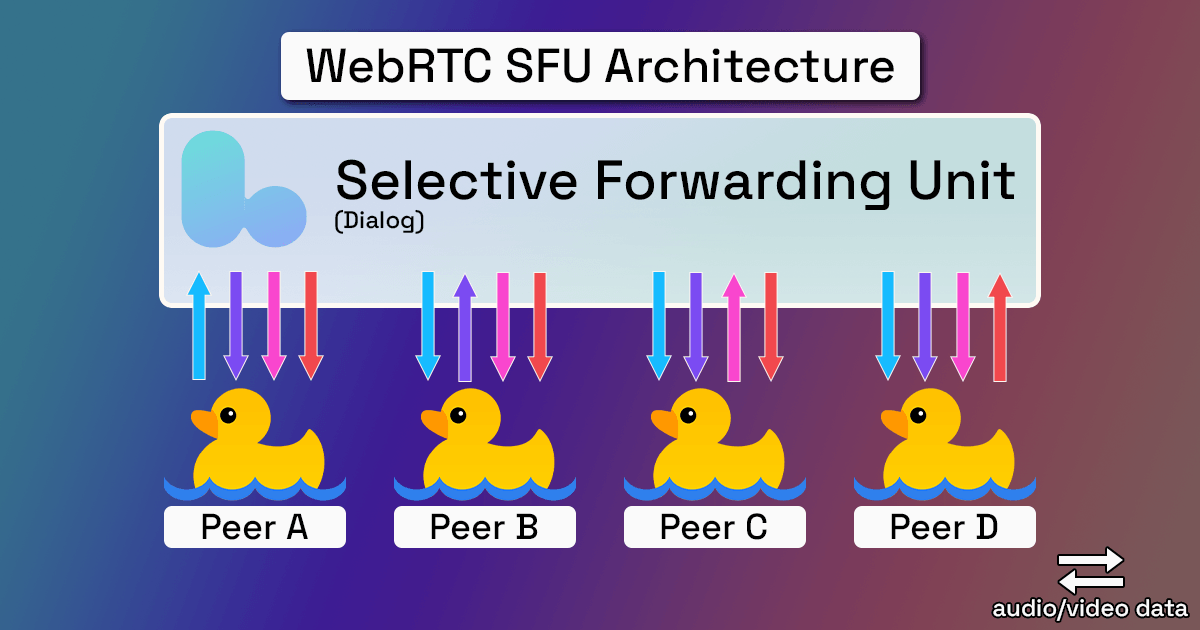

WebRTC Communication with an SFU (The Hubs Way)

The most common WebRTC communication architecture involves the use of a Selective Forwarding Unit, or SFU. A Selective Forwarding Unit is a piece of software that runs on a server. The SFU receives multiple audio/video data streams from its peers. Then, the SFU's logic determines how to forward those data streams to all of the peers connected to it.
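
The "receive once, forward to everyone else" idea can be sketched with a toy, in-memory model. This is not how Dialog or Mediasoup is implemented: the ToySfu class and its methods are hypothetical, and no networking or media handling is involved.

```javascript
// A toy, in-memory stand-in for an SFU. It does not touch the network;
// it only models "receive once, forward to everyone else".
class ToySfu {
  constructor() {
    this.peers = new Map(); // peerId -> array of packets received by that peer
  }

  join(peerId) {
    this.peers.set(peerId, []);
  }

  // The sender uploads a packet once; the SFU copies it to all other peers.
  receiveFrom(senderId, packet) {
    let forwardedCount = 0;
    for (const [peerId, inbox] of this.peers) {
      if (peerId === senderId) continue; // never echo back to the sender
      inbox.push({ from: senderId, packet });
      forwardedCount++;
    }
    return forwardedCount;
  }
}

const sfu = new ToySfu();
["A", "B", "C"].forEach(id => sfu.join(id));
const forwarded = sfu.receiveFrom("A", "audio-frame-1");
console.log(forwarded); // how many peers received A's single upload
```

A real SFU is "selective": its forwarding logic can decide which streams, and which quality levels, each peer receives. The toy above forwards everything to everyone.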

The Mozilla Hubs SFU is contained within a server component named Dialog. Dialog is written in NodeJS. You can take a look at Dialog's source code here on GitHub.

Dialog is one of a few named server components that power Hubs. Reticulum is the name of the central server which orchestrates networking between clients and gives information to each client about the Dialog instance associated with a Hub.

In the graphic below, notice how each peer only has to upload its audio/video data to one place: The SFU. The SFU is responsible for sending that data to all of the peers connected to it.

SFU Pros

- Reduced upload bandwidth requirements for peers: Each peer only needs to upload one data stream.

- Reduced CPU requirements for peers: Each peer only needs to encode the data stream once.

- Increased flexibility: For example, each peer can request that the SFU send other peers' data in lower quality.

SFU Cons

- Increased system complexity: Someone has to write and maintain complex SFU code.

- Server requirements: The hardware powering the SFU needs to be capable of forwarding audio/video data streams from many peers, which can be computationally expensive.

- Audio/video data isn't encrypted by default: The SFU developer could access each peer's audio/video data unless the SFU developer implements end-to-end encryption (TODO: LEARN IF HUBS IMPLEMENTS E2EE FOR VIDEO/VOICE DATA).

There is a third mainstream architecture for WebRTC communication, and it involves using an "MCU," or Multipoint Control Unit. If you'd like to learn about this architecture, check out this article on digitalsamba.com.

ICE, STUN, and TURN

For any two WebRTC endpoints to be able to communicate with each other, they must understand each other's network conditions and agree on a method of communication. ("WebRTC endpoints" may refer to two peers in a peer-to-peer network, or one peer and one SFU.)

The process by which two WebRTC endpoints discover each others' network conditions and select a method of communication is called Interactive Connectivity Establishment, or ICE.

There are several networking-related challenges we need to overcome during the ICE process. These challenges are important to understand, since they directly impact the way we implement WebRTC within client and server applications. After we discuss some of these challenges and their solutions, we'll discover what ICE actually does.

Networking in Real-Life Conditions - The Challenges

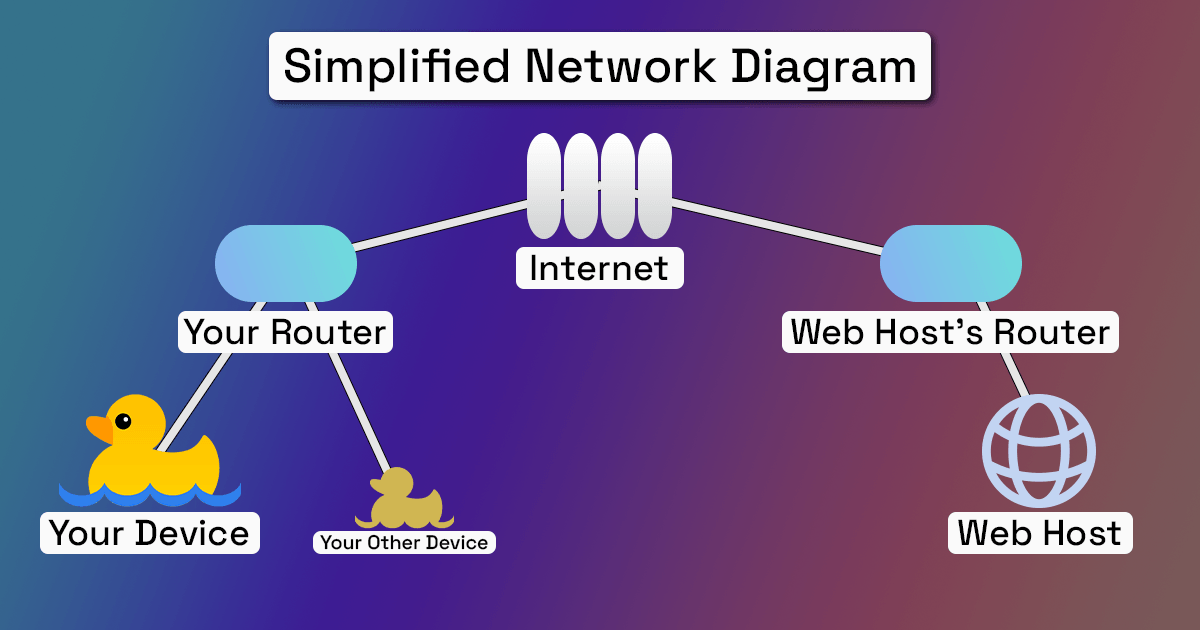

As you read this, your device is probably connected to the Internet via a router. When your device made a request for this website's HTML, JavaScript, and CSS, your router kept track of which device made that request. Then, it knew to return the result of your request to your device, rather than another device on your network.

As the request information packets passed through your networking equipment, your ISP's networking equipment, and this website host's networking equipment, the source and destination IP addresses and ports of the packets were changed until they arrived successfully at the website host's IP address. Then, packets returning from the website host were translated back to the original IP addresses and ports.

This process is called Network Address Translation, or NAT. The act of changing the information packets' source and destination IP addresses and ports is called creating a NAT mapping.
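
As a rough illustration of a NAT mapping, the sketch below models a router's translation table in memory. The ToyNat class, the IP addresses, and the starting port number are all hypothetical. Real NAT devices also track the protocol, the destination, and mapping timeouts.

```javascript
// A toy NAT table. Real routers also track protocol, destination, and timeouts.
class ToyNat {
  constructor(publicIp, firstPublicPort = 40000) {
    this.publicIp = publicIp;
    this.nextPort = firstPublicPort;
    this.outbound = new Map(); // "privateIp:privatePort" -> publicPort
    this.inbound = new Map(); // publicPort -> { ip, port }
  }

  // Rewrite the source of an outgoing packet, creating a mapping if needed.
  translateOutbound(privateIp, privatePort) {
    const key = `${privateIp}:${privatePort}`;
    if (!this.outbound.has(key)) {
      const publicPort = this.nextPort++;
      this.outbound.set(key, publicPort);
      this.inbound.set(publicPort, { ip: privateIp, port: privatePort });
    }
    return { ip: this.publicIp, port: this.outbound.get(key) };
  }

  // Route a returning packet back to the device that opened the mapping.
  translateInbound(publicPort) {
    return this.inbound.get(publicPort) ?? null;
  }
}

const nat = new ToyNat("203.0.113.7");
const mapped = nat.translateOutbound("192.168.1.20", 51000);
console.log(mapped);
console.log(nat.translateInbound(mapped.port));
```

The public IP address and port in `mapped` are what a remote server sees. This is also the information a STUN server reports back to an endpoint.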

In most cases, two given WebRTC endpoints will exist on two physically disparate networks, separated by such a router and/or firewall. In those cases, NAT must occur for those endpoints to send and receive media packets.

A Dialog SFU associated with a given Hub is accessible directly via a domain name and port combination, so the only network address translation that must occur in the Hubs case exists on the connecting client's side.

Despite a successful NAT process, there are other potential issues which may prevent two WebRTC endpoints from successfully connecting. For example, your firewall's configuration may contain specific rules which disallow traffic of a certain kind to pass, and those rules may apply to WebRTC media traffic. In other cases, a network configuration may heuristically view WebRTC traffic as suspicious and block it.

It can be frustrating to determine exactly which aspect of a network prevents successful WebRTC packet transmission. The issue could be hardware-related, software-related, or even a disconnected network cable. It's important for WebRTC application developers to gracefully handle as many known connection failure cases as they can, and develop meticulous logging practices for catching unhandled error cases.

STUN (Session Traversal Utilities for NAT)

When trying to establish two-way WebRTC media communication between two endpoints, each endpoint needs to tell the other "here is the IP address and port you might be able to use to send me WebRTC data."

STUN is a protocol which allows a WebRTC endpoint to determine the public IP address and port allocated to it after Network Address Translation has occurred. This IP address and port may then be reused for WebRTC purposes - although it may be the case that this information is unusable (more on that when discussing TURN).

There are several dozen publicly-available STUN servers which provide this service. Here's a huge list of public STUN servers actively maintained by "pradt2" on GitHub.

The Hubs client uses Google's public STUN servers, primarily stun:stun1.l.google.com:19302.

TURN (Traversal Using Relays around NAT)

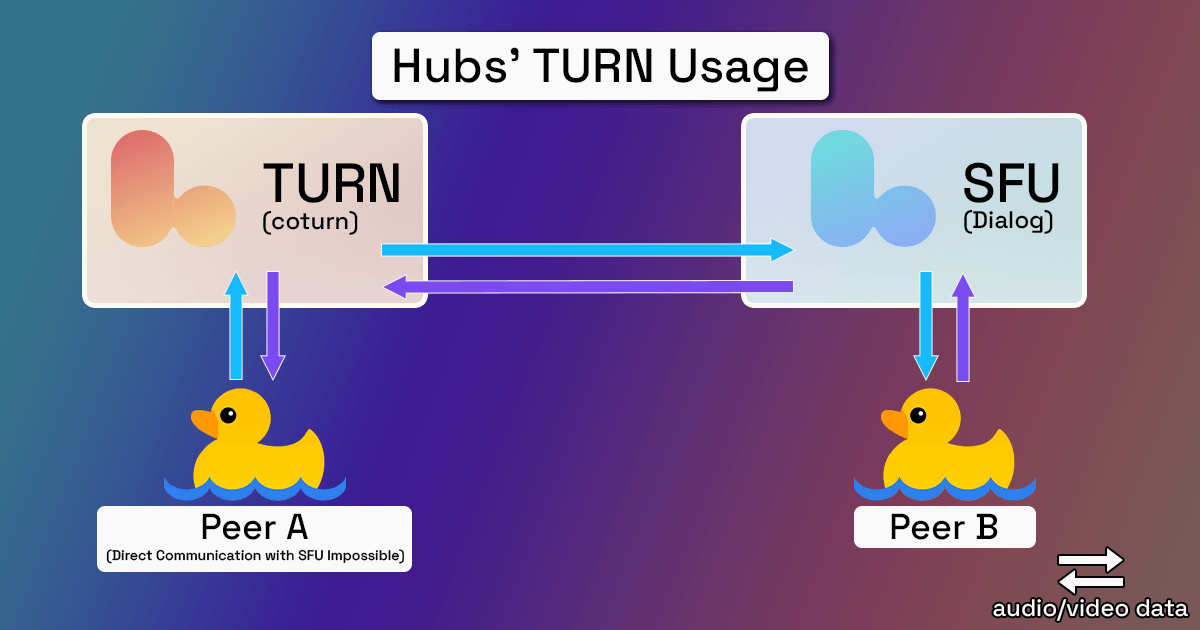

In some cases, even when two WebRTC endpoints know the public IP address and port established for direct WebRTC data transfer, direct communication is impossible. This may occur due to strict firewall rules or incompatible NAT types. Nintendo has published a great document which describes, in simplified terms, NAT type compatibilities.

When direct communication between two WebRTC endpoints is impossible, Dialog employs a dedicated TURN server. When TURN is necessary, all incoming and outgoing media packets are routed through this TURN server.

Mozilla Hubs uses coturn as its TURN server. Coturn is free and open-source.

It's more performant and less error-prone for a WebRTC endpoint to connect directly with an SFU, rather than routing traffic through a TURN server. A TURN server should only be used if communication is otherwise impossible.
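
In browser code, STUN and TURN servers are handed to WebRTC through the iceServers list of an RTCPeerConnection configuration object. The sketch below builds such an object. buildRtcConfiguration is a hypothetical helper rather than the Hubs client's own getIceServers(), and the TURN URL and credentials are placeholders.

```javascript
const GOOGLE_STUN_URL = "stun:stun1.l.google.com:19302";

// Hypothetical helper: returns an RTCConfiguration-shaped object.
// A TURN entry is only added when TURN details are supplied.
function buildRtcConfiguration(turn) {
  const iceServers = [{ urls: GOOGLE_STUN_URL }];
  if (turn) {
    iceServers.push({
      urls: turn.url,
      username: turn.username,
      credential: turn.credential
    });
  }
  return { iceServers };
}

const config = buildRtcConfiguration({
  url: "turn:turn.example.com:3478", // placeholder TURN server
  username: "example-user", // placeholder credentials
  credential: "example-password"
});
console.log(JSON.stringify(config, null, 2));
// In a browser, this object could be passed to: new RTCPeerConnection(config)
```

Listing a TURN server does not force traffic through it. The ICE process described below still prefers a direct route when one works.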

ICE - Interactive Connectivity Establishment

During the ICE process, a WebRTC endpoint will perform candidate gathering to discover all of the potential ways it can connect to another WebRTC endpoint. Candidate gathering is programmed into the endpoint's application code. Here's what that code looks like for the Hubs client:

After candidate gathering, the two WebRTC endpoints perform candidate nomination and then candidate selection, determining together which of those routes is optimal.
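
One input to that decision is each candidate's priority. RFC 8445 recommends a formula based on the candidate's type, a local preference, and a component ID. The sketch below applies that formula using the RFC's recommended type preferences. The candidate list and the local preference of 65535 are illustrative assumptions. Real ICE agents also run connectivity checks on candidate pairs, so the highest-priority candidate is not guaranteed to be selected.

```javascript
// Recommended type preferences from RFC 8445: direct (host) routes rank
// highest, relayed (TURN) routes rank lowest.
const TYPE_PREFERENCE = { host: 126, prflx: 110, srflx: 100, relay: 0 };

// priority = 2^24 * typePreference + 2^8 * localPreference + (256 - componentId)
function candidatePriority(type, localPreference = 65535, componentId = 1) {
  return (
    2 ** 24 * TYPE_PREFERENCE[type] +
    2 ** 8 * localPreference +
    (256 - componentId)
  );
}

const candidates = ["relay", "host", "srflx"].map(type => ({
  type,
  priority: candidatePriority(type)
}));

// Sort from most preferred to least preferred.
candidates.sort((a, b) => b.priority - a.priority);
console.log(candidates.map(c => `${c.type}: ${c.priority}`));
```

The ordering matches the guidance in the TURN section: a relayed route is the last resort.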

In Hubs' case, third-party software libraries handle much of candidate nomination and selection. You can learn more about the ways the Hubs client and Dialog perform candidate nomination and selection by skipping to the Mediasoup Transport section of this document.

Hubs' WebRTC Libraries

Protoo and Mediasoup

While it is possible for developers to write code using the bare WebRTC protocols and APIs, it is often useful to simplify app development by using well-tested software libraries that abstract away some of those core concepts.

The Dialog SFU and the WebRTC parts of the Hubs client make significant use of two software libraries: Protoo and Mediasoup. Let's explore what those libraries do, why they're important, and how they're used.

Dialog, the Hubs SFU, uses:

protoo-server - "a minimalist and extensible Node.js signaling framework for multi-party Real-Time Communication applications"

mediasoup - A "C++ SFU and server side Node.js module."

"Signaling" refers to the act of coordinating WebRTC communication between peers. For example, in order for Peer A to recognize the existence and media capabilities of Peer B, a signaling server must liaise that communication.

The role of Protoo, Hubs' signaling framework, will become more clear as we continue to walk through the software.

naf-dialog-adapter.js, the primary Hubs client code that communicates with Dialog, uses:

protoo-client - "The protoo client side JavaScript library. It runs in both browser and Node.js environments."

mediasoup-client - A "Client side JavaScript library for browsers and Node.js clients."

The authors of the Protoo library have chosen specific names to refer to signaling-related concepts. In this section, we'll define some of Protoo's relevant concepts and verbosely explore how they're used in Hubs code.

Directly below each term's heading, you'll find links to Protoo's official documentation relevant to that term.

Protoo WebSocketTransport

A WebSocketTransport is a JavaScript class that, when instantiated from the client, attempts to open a WebSocket connection to a specified URL.

WebSockets allow browsers and servers to communicate without the browser needing to constantly poll the server for information. For example, WebSockets are used in WebRTC signaling to inform Peer A that a new Peer B has joined a communication session - without Peer A having to ask the server "hey, are there any new peers here?".

You can learn more about WebSockets via this MDN documentation.

WebSocketTransport Example Usage

From hubs/naf-dialog-adapter.js > DialogAdapter > connect():

connect() {

...

const protooTransport = new protooClient.WebSocketTransport(urlWithParams.toString(), {

retry: { retries: 2 }

});

...

}

Translation: Open a new WebSocket connection to a "URL with params" we've dynamically constructed based on the specific Hub to which we're connected. If the connection fails, attempt to retry two more times before closing the WebSocket connection.

We can obtain an example of such a "URL with params" by connecting to the Hubs Demo Promenade:

const urlWithParams = 'wss://geyc4mjogaxdenl4guya.stream.reticulum.io:4443/?roomId=E4e8oLx&peerId=fd25a9c5-ee56-4be8-ad8c-ba44d9247b82'

The resultant protooTransport variable will be reused when creating a client-side Protoo Peer.
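
Because connect() calls urlWithParams.toString(), the value is presumably a URL object rather than a plain string. Here is a minimal sketch of how such a URL could be assembled with the standard URL API. The host, port, roomId, and peerId are copied from the example URL above, and this is not necessarily how the Hubs client builds it.

```javascript
// Values copied from the example URL above.
const host = "geyc4mjogaxdenl4guya.stream.reticulum.io";
const port = 4443;

// Build the base WebSocket URL, then attach the query parameters.
const urlWithParams = new URL(`wss://${host}:${port}`);
urlWithParams.searchParams.set("roomId", "E4e8oLx");
urlWithParams.searchParams.set("peerId", "fd25a9c5-ee56-4be8-ad8c-ba44d9247b82");

console.log(urlWithParams.toString());
```

Using searchParams instead of string concatenation means values are escaped automatically if they ever contain reserved characters.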

Protoo Room

A Protoo Room is a JavaScript class that "represents a multi-party communication context". A Room contains a list of Peers.

A Protoo "Room", a Dialog "Room", and a Hubs "Room" are three different things. It can sometimes be challenging to differentiate between the meanings of the word "Room" within the Hubs codebase.

Room Example Usage

From dialog/lib/Room.js > Room > getCCU():

getCCU() {

if (!this._protooRoom || !this._protooRoom.peers) return 0;

return this._protooRoom.peers.length;

}

Translation: The term "CCU" means "Concurrent Users". It can be useful to determine how many users are currently inside a Dialog Room.

Firstly, if this Dialog room doesn't have a Protoo room associated with it, OR if that Protoo room doesn't have any Protoo Peers associated with it, return 0.

Next, we return the number of elements in the list of Peers contained within the Protoo Room associated with this Dialog Room.
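
The same guard-then-count logic can be tried in isolation. In the sketch below, getCCU is rewritten as a standalone function, and the plain objects standing in for a Protoo Room are hypothetical test values.

```javascript
// Standalone version of the guard-then-count logic from Room.getCCU().
function getCCU(protooRoom) {
  if (!protooRoom || !protooRoom.peers) return 0;
  return protooRoom.peers.length;
}

console.log(getCCU(undefined)); // no Protoo room at all
console.log(getCCU({})); // a room object without a peers list
console.log(getCCU({ peers: [] })); // a room with an empty peers list
console.log(getCCU({ peers: [{ id: "a" }, { id: "b" }] })); // two connected peers
```

Note that an empty array is truthy in JavaScript, so the empty-list case passes the guard and returns 0 through peers.length.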

Protoo Peer

A Protoo Peer represents a client connected to a Protoo Room. The concept of a Peer exists on both the Protoo server and the Protoo client.

Protoo Peers can:

- Send requests from the client to the Protoo signaling server via Peer.request(method, [data])

  - The server will send a response to a request.

  - The Hubs client sends many kinds of requests to the Protoo signaling server. For example, the client will send a "join" request to the Protoo server when initially joining a Dialog Room.

- Send notifications from the client to the Protoo signaling server via Peer.notify(method, [data])

  - The server will not send a response to a notification.

  - The Hubs client does not currently send any notifications, although the Dialog server sends notifications to connected Peers. For example, the server will send a "peerClosed" notification to all other connected Peers when a Protoo peer's connection closes.

Peer Example Usage - Client Context

From hubs/naf-dialog-adapter.js > DialogAdapter > connect():

connect() {

...

this._protoo = new protooClient.Peer(protooTransport);

...

this._protoo.on("notification", notification => {

...

switch (notification.method) {

...

case "peerBlocked": {

const { peerId } = notification.data;

document.body.dispatchEvent(new CustomEvent("blocked", { detail: { clientId: peerId } }));

break;

}

...

}

});

...

}

Translation: When the Dialog client begins the connection process to the Dialog server, the client instantiates a new Protoo Peer class. That instantiation requires passing a valid WebSocketTransport object, named protooTransport in this case.

Then, the client code sets up a number of handlers for events that will originate from the Protoo server via our WebSocket connection.

One of those events is called "notification". Notification events contain arbitrary data, and the client cannot respond to notification events.

We direct the application to a specific codepath based on the value of the "method" key included with the notification payload. In this example, that "method" is the string "peerBlocked".

We then retrieve the unique, alphanumeric peerId from the notification payload's data. This peerId is associated with a remote Peer included in the Protoo Room to which we are connected.

Finally, we dispatch a custom event to the browser's document body whose name is "blocked" and whose payload includes the remote peerId. At this point, some other Hubs client code - already set up to listen to "blocked" events - will consume this event and take some action.

The authors of the Mediasoup library - who are the same as the authors of the Protoo library - have chosen specific names to refer to SFU-related concepts. In this section, we'll define some of Mediasoup's relevant concepts and verbosely explore how they're used in Hubs code.

Directly below each term's heading, you'll find links to Mediasoup's official documentation relevant to that term.

As we discuss Mediasoup concepts, note that Protoo doesn't have the concept of "audio/video media," and Mediasoup doesn't have the concept of Rooms or Peers. Those concepts come together in Dialog and in the client's Dialog Adapter.

Mediasoup Producer

Producer - Client Context

In the client context, a Mediasoup Producer is a JavaScript class that represents an audio or video source to be transmitted to and injected into a server-side Mediasoup Router.

Producers are contained within Transports, which define how the media packets are carried.

Producer Example Usage - Client Context

From hubs/naf-dialog-adapter.js > DialogAdapter > setLocalMediaStream():

async setLocalMediaStream(stream) {

if (!this._sendTransport) {

console.error("...");

return;

}

...

await Promise.all(stream.getTracks().map(async track => {

if (track.kind === "audio") {

...

if (this._micProducer) {

...

} else {

this._micProducer = await this._sendTransport.produce({

track,

...

});

...

}

}

}))

}

Translation: setLocalMediaStream() is called by the client in a few cases, including when the client first joins a Dialog room, and when the client's audio input device changes. The stream variable passed to the function is a MediaStream object, such as that returned by getUserMedia().

First, we must make sure we've already created a "Send Transport" locally. This Transport is created when initially joining a Dialog Room.

await Promise.all(...) means "fulfill this one Promise when all of the passed Promises have fulfilled, or reject this one Promise when any one of the passed Promises has rejected."
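
Here is a small, self-contained sketch of that Promise.all() behavior. The plain objects stand in for MediaStreamTrack instances. processTrack and the "broken" kind are hypothetical and exist only to force a rejection.

```javascript
// Each "track" is handled by its own async function, as in setLocalMediaStream().
async function processTrack(track) {
  if (track.kind === "broken") throw new Error(`cannot process ${track.id}`);
  return `${track.kind}:${track.id}`;
}

// Fulfills with every result, or rejects as soon as any one track fails.
async function processAll(tracks) {
  return Promise.all(tracks.map(track => processTrack(track)));
}

const succeeded = processAll([
  { kind: "audio", id: "t1" },
  { kind: "video", id: "t2" }
]);
const failed = processAll([
  { kind: "audio", id: "t1" },
  { kind: "broken", id: "t2" }
]);

succeeded.then(results => console.log(results));
failed.catch(error => console.log(error.message));
```

When Promise.all() fulfills, the results keep the order of the input array, regardless of which Promise settled first.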

Next, we split the input stream into its constituent tracks, which are of type MediaStreamTrack. In most cases, the MediaStream associated with a person's audio input device consists of just one track.

If the "kind" of track we're processing is an "audio" track (as opposed to a "video" track), and we've previously created a Mediasoup Producer, we execute a special case of code.

However, if this is an "audio" track and this is the first time we're producing, we have to create a new Producer by calling this._sendTransport.produce(). Calling this function tells the Transport to begin sending the specified audio track to the remote Mediasoup Router as per the specified options.

We can later pause, resume, and close the resultant Producer.

Critically, none of the Mediasoup-related code above has anything to do with signaling (which is handled by Protoo). This signaling component is necessary for getting the media stream from Peer A to all other clients connected to a Dialog Room. Let's quickly follow through the client side of that signaling piece here:

From hubs/naf-dialog-adapter.js > DialogAdapter > createSendTransport():

this._sendTransport.on("produce", async ({ kind, rtpParameters, appData }, callback, errback) => {

...

try {

const { id } = await this._protoo.request("produce", {

transportId: this._sendTransport.id,

kind,

rtpParameters,

appData

});

callback({ id });

} catch (error) {

...

errback(error);

}

});

This code snippet says: "When the Mediasoup _sendTransport starts producing, let the Protoo signaling server know that this client has started producing." Below, as we discuss the server-side Producer, we'll make more sense of what that means.

Producer - Server Context

In the server context, a Mediasoup Producer is a JavaScript class that represents an audio or video source being injected into a server-side Mediasoup Router.

Producers are contained within Transports, which define how the media packets are carried.

Producer Example Usage - Server Context

From dialog/lib/Room.js > Room > _handleProtooRequest():

async _handleProtooRequest(peer, request, accept, reject) {

const router = this._mediasoupRouters.get(peer.data.routerId);

switch (request.method) {

...

case 'produce':

...

const transport = peer.data.transports.get(transportId);

if (!transport) throw new Error('...');

...

const producer = await transport.produce(

{

kind,

...

});

for (const [ routerId, targetRouter ] of this._mediasoupRouters)

{

if (routerId === peer.data.routerId) {

continue;

}

await router.pipeToRouter({

producerId : producer.id,

router : targetRouter

});

}

peer.data.producers.set(producer.id, producer);

...

for (const otherPeer of this._getJoinedPeers({ excludePeer: peer }))

{

this._createConsumer(

{

consumerPeer : otherPeer,

producerPeer : peer,

producer

});

}

break;

}

}

Translation: _handleProtooRequest() is called on the Dialog server when a Protoo signaling request is received. Dialog will always formulate and send a response to Protoo requests.

When the Dialog server receives a Protoo signaling request with method "produce" - as will occur when a Peer begins producing media from the client - we'll run the code above within the switch statement.

First, the Dialog server must obtain the "Send Transport" it previously created and stored associated with this Peer. This Transport was created at the client's signaling request when the client joined the Dialog room. If we don't find that Send Transport, we fail and return early.

We then create a new Producer on the server associated with that Send Transport.

In the current iteration of Hubs and Dialog, there's only ever a single element in the this._mediasoupRouters Map. So, we will never pipe any data between Routers; we'll hit that continue statement.
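
The effect of that loop can be checked in isolation. The sketch below keeps only the "skip my own Router" logic. routersToPipeTo is a hypothetical helper, and the empty objects stand in for Mediasoup Routers.

```javascript
// Returns the IDs of the Routers a new Producer would be piped to.
function routersToPipeTo(mediasoupRouters, ownRouterId) {
  const targets = [];
  for (const [routerId] of mediasoupRouters) {
    if (routerId === ownRouterId) continue; // skip the Producer's own Router
    targets.push(routerId);
  }
  return targets;
}

// One Router: the loop only ever hits `continue`, so nothing is piped.
const singleRouter = new Map([["router-1", {}]]);
console.log(routersToPipeTo(singleRouter, "router-1"));

// Two Routers: the Producer would be piped to the other Router.
const twoRouters = new Map([["router-1", {}], ["router-2", {}]]);
console.log(routersToPipeTo(twoRouters, "router-1"));
```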

Dialog then keeps track of the Producer objects associated with a given Protoo Peer's ID in a Map. This is necessary for pausing, resuming, and closing that Producer later.

We then create Dialog Consumers on the server associated with every other Peer who is marked as having joined the Dialog room. We'll learn about Consumers below.

Mediasoup Consumer

Consumer - Client Context

In the client context, a Mediasoup Consumer is a JavaScript class that represents an audio or video source being transmitted from a server-side Mediasoup Router to the client application.

Consumers are contained within Transports, which define how the media packets are carried.

Consumer Example Usage - Client Context

From hubs/naf-dialog-adapter.js > DialogAdapter > connect() > _protoo.on("request") callback:

this._protoo.on("request", async (request, accept, reject) => {

switch (request.method) {

case "newConsumer":

const { peerId, producerId, id, kind, rtpParameters, appData, ... } =

request.data;

try {

const consumer = await this._recvTransport.consume({

id,

producerId,

kind,

rtpParameters,

appData: { ...appData, peerId }

});

this._consumers.set(consumer.id, consumer);

...

accept();

this.resolvePendingMediaRequestForTrack(peerId, consumer.track);

this.emit("stream_updated", peerId, kind);

} catch (err) {

error('...');

throw err;

}

break;

}

});

Translation: The client runs this code when the Dialog server sends it a Protoo signaling request with method "newConsumer". The server sends that request from _createConsumer(), which we walk through in the server context below.

First, we pull the details of the new Consumer out of the request's data. Those details include the peerId of the remote Peer who is producing the media, the ID of that Peer's Producer, and the RTP parameters that describe the incoming media.

Next, we create a client-side Consumer on top of the "Receive Transport" by calling this._recvTransport.consume(). We store that Consumer in a Map keyed by the Consumer's ID.

We then call accept() to respond to the server's request.

Finally, we resolve any pending media request for the remote Peer using the Consumer's track, and we emit a "stream_updated" event containing the remote peerId and the kind of media.

If creating the Consumer fails, we log the error and rethrow it.

Consumer - Server Context

In the server context, a Mediasoup Consumer is a JavaScript class that represents an audio or video source being forwarded from a server-side Mediasoup Router to a particular endpoint.

Consumers are contained within Transports, which define how the media packets are carried.

Consumer Example Usage - Server Context

From dialog/lib/Room.js > Room > _createConsumer():

async _createConsumer({ consumerPeer, producerPeer, producer }) {

const transport = Array.from(consumerPeer.data.transports.values()).find((t) => t.appData.consuming);

if (!transport) { return; }

let consumer;

try {

consumer = await transport.consume(

{

producerId : producer.id,

rtpCapabilities : consumerPeer.data.rtpCapabilities,

paused : true

});

} catch (error) {

return;

}

consumerPeer.data.consumers.set(consumer.id, consumer);

consumerPeer.data.peerIdToConsumerId.set(producerPeer.id, consumer.id);

...

// Setup Consumer event handlers...

...

try {

await consumerPeer.request(

'newConsumer',

{

peerId : producerPeer.id,

producerId : producer.id,

id : consumer.id,

kind : consumer.kind,

rtpParameters : consumer.rtpParameters,

type : consumer.type,

appData : producer.appData,

producerPaused : consumer.producerPaused

});

await consumer.resume();

...

} catch (error) {

return;

}

}

Translation: We call _createConsumer() in a couple of cases, primarily when the Dialog server receives a Protoo signaling request with method "produce". This happens when a Peer begins newly producing, as in the Producer code we walked through above.

First, the Dialog server must obtain the "Receive Transport" it previously created and stored associated with this Peer. This Transport was created at the client's signaling request when the client joined the Dialog room. If we don't find that Receive Transport, we fail and return early.

Next, we initialize a paused Consumer on top of that Transport, given the RTP capabilities of the Consumer. "RTP" stands for "Real-time Transport Protocol", and "RTP capabilities" refer to the media codecs and types that a consumer can consume. They are set by the remote Peer when joining the Dialog Room.

We then store data about that new Consumer in two Maps, which are used in cases such as pausing/resuming the Consumer, or blocking other Peers.

We then try to signal to the relevant Protoo Peer via a "newConsumer" request that the Peer needs to begin consuming some media. In this context, that Peer is a Hubs client.

After we get a response to that "newConsumer" request, we resume this consumer. That'll trigger the remote endpoint to begin receiving the first RTP media packet.

Mediasoup Device

A Mediasoup Device is a client-side JavaScript class that represents an endpoint used to connect to a Mediasoup Router to send and/or receive media.

A Device is an application's entrypoint into the Mediasoup client library; Transports are created from Devices, and Producers/Consumers are then created from Transports.

Device Example Usage

From hubs/naf-dialog-adapter.js > DialogAdapter > _joinRoom():

async _joinRoom() {

this._mediasoupDevice = new mediasoupClient.Device({});

const routerRtpCapabilities = await this._protoo.request("getRouterRtpCapabilities");

await this._mediasoupDevice.load({ routerRtpCapabilities });

...

}

Translation: _joinRoom() is called as soon as we open the Protoo signaling connection.

Immediately after that happens, we instantiate a new Mediasoup Device, specifying no options. By not specifying any options to this function, we're letting the Mediasoup library choose a suitable WebRTC handler associated with the client's current browser.

We then ask the Protoo signaling server for the associated Mediasoup Router's RTP capabilities. RTP capabilities define what media codecs and RTP extensions the router supports.

Given those RTP capabilities, we can then call load() on our Device. The Device must be loaded and ready in order to produce and consume media.

Mediasoup Transport

In both the client and server contexts, a Mediasoup Transport is a JavaScript class that uses the network to connect a client-side Mediasoup Device to a server-side Mediasoup Router. A Transport enables sending media via a Producer or receiving media via a Consumer, but not both at the same time.

A Mediasoup Transport makes use of the RTCPeerConnection WebRTC interface.

In the context of Transports, it's important to understand ICE, STUN, and TURN. For a refresher, tap here to scroll up to the "ICE, STUN, and TURN" section of this document.

Transport Example Usage - Client Context

From hubs/naf-dialog-adapter.js > DialogAdapter > createSendTransport() > _sendTransport.on("connectionstatechange") callback:

this._sendTransport.on("connectionstatechange", async connectionState => {

//this.checkSendIceStatus(connectionState); (Function call expanded below.)

if (connectionState === "failed") {

//this.restartSendICE(); (Function call expanded below.)

if (!this._protoo || !this._protoo.connected) {

return;

}

try {

if (!this._sendTransport?._closed) {

await this.iceRestart(this._sendTransport);

} else {

const { host, turn } = this._serverParams;

const iceServers = this.getIceServers(host, turn);

await this.recreateSendTransport(iceServers);

}

} catch (err) {

...

}

}

});

Translation: The client's this._sendTransport "Send Transport" is responsible for holding the "Mic Producer" and transmitting the audio input data over the network. (Tap here to scroll up to the Producer example in this document.)

This event handler code fires anytime the Send Transport's connection state changes. A Transport's connection state is always one of the following values:

- "closed"

- "failed"

- "disconnected"

- "new"

- "connecting"

- "connected"

You can find a description for each of these states here: MDN/RTCPeerConnection/connectionState

In this code, we only take action when the Send Transport's connection state changes to "failed". This happens when "one or more of the ICE transports on the connection is in the failed state."

The WebRTC spec recommends performing an ICE Restart in this case, which is what the above code does in various ways depending on the application's current state.
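The ICE restart itself is a short exchange between the client and the server. Below is a minimal sketch of that exchange, not the actual Hubs implementation. It assumes the signaling request is named "restartIce" and takes a transportId, both of which are assumptions modeled on Mediasoup's demo application. `signaling` stands in for any object with a Protoo-style request() method, and `transport` stands in for a mediasoup-client Transport, whose restartIce({ iceParameters }) method is part of the mediasoup-client API.

```javascript
// Sketch only: "restartIce" and the { transportId } payload are assumed names.
// `signaling` is any object exposing request(method, data) => Promise.
// `transport` is a mediasoup-client Transport (or a compatible stand-in).
async function restartTransportIce(signaling, transport) {
  // Ask the server for fresh ICE credentials for the server-side Transport.
  const iceParameters = await signaling.request("restartIce", {
    transportId: transport.id
  });

  // Hand the new credentials to the local Transport so ICE can renegotiate.
  await transport.restartIce({ iceParameters });

  return iceParameters;
}
```

If the local Transport has already been closed, a restart is not possible, which is why the handler above falls back to recreating the Transport in that case.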

There may be an opportunity to optimize or improve this particular snippet of source code - connection state changes are a common source of application failure.

The client-side Dialog adapter sets up a very similar event handler for the Receive Transport, this._recvTransport.

Transport Example Usage - Server Context

From dialog/lib/Room.js > Room > _handleProtooRequest():

async _handleProtooRequest(peer, request, accept, reject) {
  const router = this._mediasoupRouters.get(peer.data.routerId);

  switch (request.method) {
    ...
    case 'createWebRtcTransport':
      const {
        forceTcp,
        producing,
        consuming,
        sctpCapabilities
      } = request.data;

      const webRtcTransportOptions =
      {
        ...config.mediasoup.webRtcTransportOptions,
        enableSctp     : Boolean(sctpCapabilities),
        numSctpStreams : (sctpCapabilities || {}).numStreams,
        appData        : { producing, consuming }
      };

      if (forceTcp)
      {
        webRtcTransportOptions.enableUdp = false;
        webRtcTransportOptions.enableTcp = true;
      }

      const transport = await router.createWebRtcTransport(
        webRtcTransportOptions);

      peer.data.transports.set(transport.id, transport);

      ...
      // Setup transport event handlers...
      ...

      accept(
        {
          id             : transport.id,
          iceParameters  : transport.iceParameters,
          iceCandidates  : transport.iceCandidates,
          dtlsParameters : transport.dtlsParameters,
          sctpParameters : transport.sctpParameters
        });

      ...
      break;
  }
}

Translation: The client sends a "createWebRtcTransport" signaling request via Protoo for its Send Transport and Receive Transport separately upon first joining the Dialog Room.

When the Dialog server receives such a signaling request, it must create a server-side Transport given certain parameters from the client and from Dialog's configuration. Gathering and setting those parameters makes up most of the code above.

Once the new Transport is created, it is associated with the Protoo Peer who sent the request to create a new Transport.

Finally, data about the Transport is sent back to the client that requested the new Transport. That data includes the Transport's:

- ID: A string used to uniquely identify the Transport

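- ICE Parameters: The ICE credentials (username fragment and password) used to authenticate connectivity checks with the server-side Transport

- ICE Candidates: The network addresses (IP address, port, and protocol) at which the server-side Transport can be reached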

- DTLS Parameters: Encryption information used to create a secure Transport connection

- SCTP Parameters: SCTP is disabled in Hubs' case, so this will be undefined

The client will then use that information to create a Send or Receive Transport locally. Subsequently, when the client's Transport is about to connect, the client will send a "connectWebRtcTransport" signaling request containing its own DTLS parameters so that a secure DTLS connection can be established.
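The client side of this exchange can be sketched as follows. This is a simplified illustration under stated assumptions, not the code from naf-dialog-adapter.js: the function name createClientSendTransport is hypothetical, and the { transportId, dtlsParameters } payload for "connectWebRtcTransport" is an assumption. The calls device.createSendTransport() and transport.on("connect", ...) are part of the mediasoup-client API. `device` is expected to be a loaded Mediasoup Device, and `signaling` is any object with a Protoo-style request() method.

```javascript
// Sketch only: the function name and the request payload shapes are assumptions.
async function createClientSendTransport(device, signaling) {
  // 1. Ask the server to create its side of the Transport.
  const { id, iceParameters, iceCandidates, dtlsParameters, sctpParameters } =
    await signaling.request("createWebRtcTransport", {
      forceTcp: false,
      producing: true,
      consuming: false
    });

  // 2. Create the matching local Transport from the server's answer.
  const transport = device.createSendTransport({
    id,
    iceParameters,
    iceCandidates,
    dtlsParameters,
    sctpParameters
  });

  // 3. mediasoup-client emits "connect" when the Transport is about to
  //    establish its ICE and DTLS connection. The handler must pass the
  //    local DTLS parameters to the server, then report success or failure.
  transport.on("connect", ({ dtlsParameters: localDtlsParameters }, callback, errback) => {
    signaling
      .request("connectWebRtcTransport", {
        transportId: transport.id,
        dtlsParameters: localDtlsParameters
      })
      .then(() => callback())
      .catch(errback);
  });

  return transport;
}
```

A Receive Transport would follow the same pattern with device.createRecvTransport() and the producing/consuming flags swapped.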

Dialog Processes Demystified

Here, we contextualize and explore various internal processes that occur relative to the Hubs client, Reticulum, and Dialog:

Dialog Connection Process

We outline here the steps that the client takes to connect to a Dialog instance and begin sending/receiving media.

This outline does not cover error handling. If an error occurs at any point during this complex connection process, the client must attempt to recover. There may be unhandled error cases in this process. Such error cases can be tough to find, and may be the source of unresolved WebRTC-related bugs.

| File | Function | Translation |
|---|---|---|
| hub.js | | Fires upon page load |
| hub.js | | Join the Reticulum (Phoenix) WebSocket Channel associated with this Hub |
| hub.js | | We successfully joined Reticulum and have received Dialog connection data; call this function |
| hub.js | | Call into the Dialog adapter with the Dialog connection data |
| Dialog Adapter | | Connect to the Protoo signaling server |
| Dialog Adapter | | Set up Protoo request handler for |
| Dialog Adapter | | The Protoo signaling connection is open; call this function |
| Dialog Adapter | | Create a Mediasoup Device |
| Dialog Adapter | | Perform ICE candidate gathering based on info from Reticulum |
| Dialog Adapter | | Create Send and Receive Transports |
| Dialog Adapter | | When the Send Transport is created and connected, this callback fires. (The same happens for the Receive Transport.) |
| Dialog Adapter | | Make a signaling request to send Dialog our DTLS parameters for secure Transport connections |
| Dialog Adapter | | Set up a callback so that later, when a local Producer starts producing, we use this callback to tell Dialog to create a Consumer |
| Dialog Adapter | | Send a Protoo signaling request to "join". We become a new Dialog Peer, and begin consuming existing peers' media producers. |
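The ordering of the steps in the table can be condensed into a single async sequence. The sketch below is only an outline of that ordering: every function on the `steps` object is a hypothetical stand-in for the real Hubs code (only the name getIceServers appears in the client snippet earlier in this document), and all error handling is omitted, just as in the table.

```javascript
// Sketch only: all names on `steps` are hypothetical placeholders.
async function connectToDialog(steps) {
  // hub.js: join the Reticulum channel and receive Dialog connection data.
  const connectionData = await steps.joinReticulumChannel();

  // Dialog adapter: open the Protoo signaling connection.
  const signaling = await steps.openSignaling(connectionData);

  // Create a Mediasoup Device and load it with the Router's RTP capabilities.
  const device = await steps.createAndLoadDevice(signaling);

  // Derive ICE server information from the data Reticulum sent us.
  const iceServers = steps.getIceServers(connectionData);

  // Create the Send and Receive Transports, one after the other.
  const sendTransport = await steps.createTransport(device, signaling, iceServers, "send");
  const recvTransport = await steps.createTransport(device, signaling, iceServers, "recv");

  // Finally, "join" to become a Dialog Peer and start consuming media.
  await steps.join(signaling, device);

  return { signaling, device, sendTransport, recvTransport };
}
```

Because each step awaits the previous one, a failure anywhere rejects the whole sequence, which is the point at which a real client would need its recovery logic.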

Dialog Room Migration Process

We outline here the steps that the client takes when moving between two Hubs rooms on the same origin host. This can happen when clicking on a link in Hub A to Hub B, or when the user clicks the "back" button in their browser and the previous page was a Hub on the same host.

| File | Function | Translation |
|---|---|---|
| open-media-button.js | | Called when a "visit room" link is tapped in the 3D environment. |
| change-hub.js | | This function involves calls to many other client systems, most of which are not covered in this translation. In order to migrate to another Dialog instance, we must first obtain data about that Hub from Reticulum, including the host, port number, and Hub ID. Once the client has that data, we can disconnect from the current Dialog server and connect to the new one. This is done in the same way as in "Dialog Connection Process" above. |
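The migration order described in the table (fetch the new Hub's data, disconnect, then reconnect) can be sketched like this. The names migrateToHub, fetchHubData, disconnect, and connect are all hypothetical placeholders for the real client code in change-hub.js and the Dialog adapter.

```javascript
// Sketch only: all function names here are hypothetical placeholders.
async function migrateToHub(hubId, currentConnection, { fetchHubData, connect }) {
  // 1. Obtain the target Hub's Dialog data from Reticulum first, so that a
  //    failed lookup does not leave us disconnected from the current room.
  const { host, port } = await fetchHubData(hubId);

  // 2. Leave the current Dialog server.
  await currentConnection.disconnect();

  // 3. Connect to the new Dialog server, following the same steps as the
  //    initial connection process.
  return connect({ host, port, hubId });
}
```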

How does the Hubs client process remote audio streams into spatialized 3D sound?


How many Hubs clients can connect to one Dialog instance?


What are some ways the Hubs team is considering improving Dialog's capacity?


Code Sample Library (Advanced)

Here you'll find contextualized examples of code found throughout the Hubs/Dialog codebases. It can be challenging to find the exact code that answers a specific question about program flow. You may find your answer in this section.

Sending TURN Information to the Hubs Client

By following the chronological code path below, you will learn:

- How the Reticulum server code determines what TURN information to send to the client

- How, upon initial connection to Dialog, the client code makes use of that TURN information

TODO: Make sure that the Elixir function below is what's actually run when someone joins a Hubs room. The .receive("ok", async data => { is throwing me off.

Conclusion

With a more thorough understanding of WebRTC, Dialog, and Hubs as a whole, you are now equipped to critically explore and make meaningful contributions to the Hubs codebases:

- Hubs client

- Dialog SFU and Signaling Server

- Reticulum Phoenix Server

- hubs-compose - A Docker Compose setup for locally running all Hubs components

If you have additional questions about Hubs or Hubs' use of WebRTC, Mozilla developers are available to answer your questions on the Hubs Discord server.